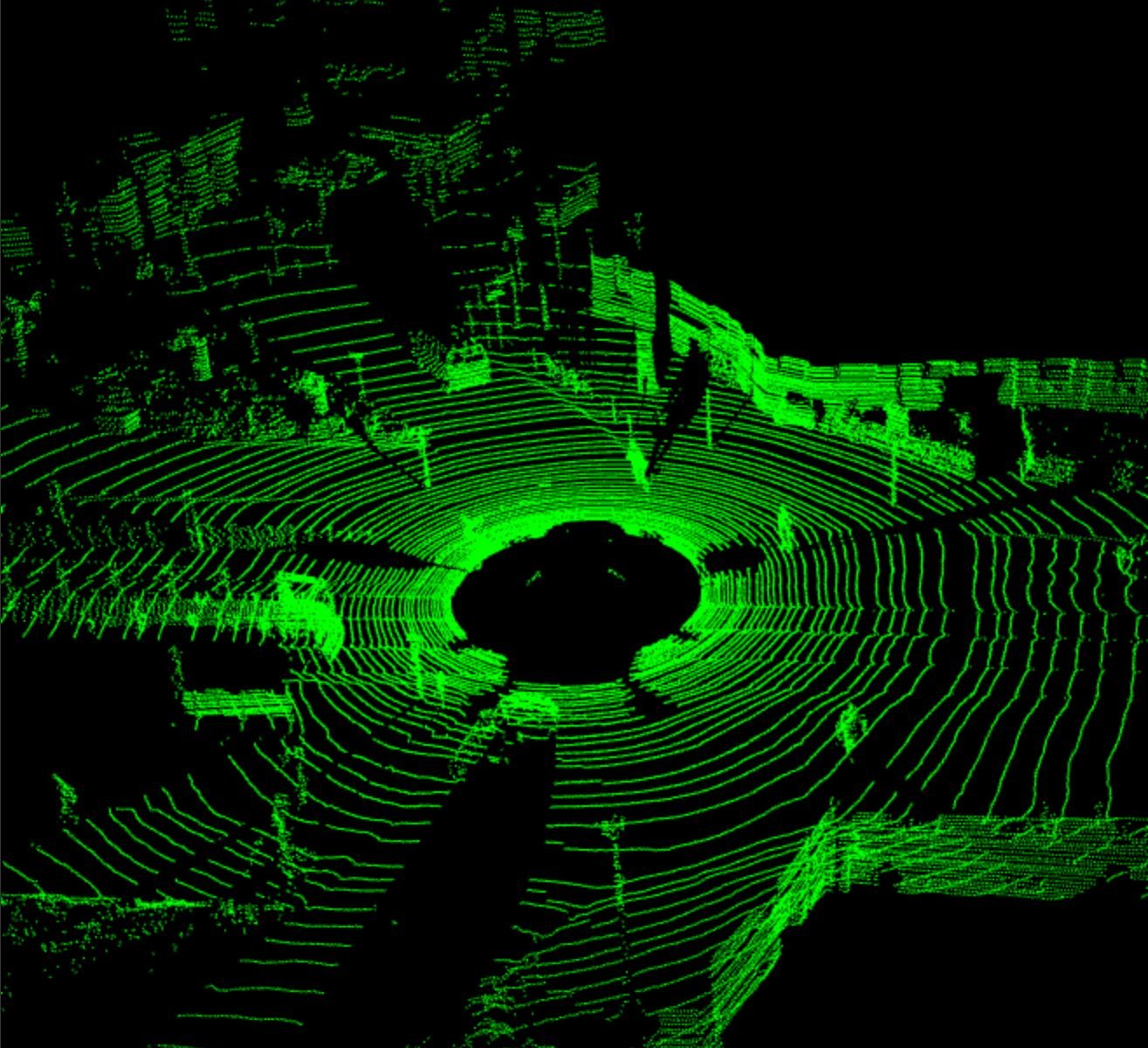

AI-Powered LiDAR Annotation & 3D Perception Platform

From raw point clouds to production-ready datasets — accelerate your perception AI with automated labeling, calibration, and fusion workflows for autonomous vehicles, robotics, and defense.

Turning Raw LiDAR Into Reliable Intelligence

Autonomous vehicles, robotics, and geospatial systems see the world in points — millions of them per second.

JTheta.ai transforms that complexity into clarity.

Our AI-assisted LiDAR & 3D Perception Platform helps your teams convert massive point clouds into structured, model-ready data — faster, smarter, and at scale.

✅ AI-powered annotation, tracking & calibration

✅ End-to-end integration with ML pipelines

✅ Designed for automotive, robotics, defense & mapping use cases

Why 3D Annotation Is Hard — and How JTheta Solves It

| The Challenge | How JTheta Solves It |
| --- | --- |
| LiDAR data is unstructured and hard to interpret. | AI-assisted segmentation and intelligent object clustering simplify labeling. |
| Maintaining temporal consistency across frames is time-consuming. | Automated interpolation and motion tracking ensure smooth propagation. |
| Sensor fusion requires precise cross-modal alignment. | Calibrate LiDAR, radar, and camera streams with sub-centimeter precision. |
| Manual calibration causes drift and data loss. | Built-in alignment and drift correction tools maintain dataset fidelity. |
| Most tools are 2D-centric. | JTheta is purpose-built for 3D spatial annotation, with depth, geometry, and context awareness. |

Platform Capabilities — Built for Real-World Perception

| Capability | Description |
| --- | --- |
| 3D Bounding Boxes & Segmentation | Annotate vehicles, pedestrians, and other objects with cuboids or per-point semantic masks. |
| Frame Tracking & Interpolation | Propagate annotations across LiDAR frames using AI-based motion prediction. |
| AI-Assisted Pre-Labeling | Built-in models auto-generate labels, reducing manual effort by up to 70%. |
| Sensor Fusion Support | Overlay LiDAR with camera and radar data for unified multi-modal annotation. |
| Calibration & Synchronization | Auto-correct intrinsic/extrinsic parameters for sub-centimeter precision. |
| Quality Control & Reviewer Workflows | Multi-stage QA, audit tracking, and discrepancy resolution dashboards. |
| Flexible Deployment & Export Formats | Deploy cloud, hybrid, or on-prem; export to KITTI, JSON, ROS, or PCD. |
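To make frame propagation concrete, here is a minimal sketch of keyframe interpolation: an annotator labels a cuboid on two frames and the frames in between are filled in automatically. It uses plain linear interpolation as a stand-in, not the AI-based motion prediction described above, and the `Cuboid` class and `interpolate` function are hypothetical names for illustration, not part of the JTheta API.

```python
import math
from dataclasses import dataclass


@dataclass
class Cuboid:
    """Hypothetical cuboid label: center and size in meters, yaw in radians."""
    x: float
    y: float
    z: float
    length: float
    width: float
    height: float
    yaw: float


def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation between a and b for t in [0, 1]."""
    return a + (b - a) * t


def lerp_angle(a: float, b: float, t: float) -> float:
    """Interpolate along the shortest arc, so 350° to 10° turns 20°, not 340°."""
    delta = math.atan2(math.sin(b - a), math.cos(b - a))
    return a + delta * t


def interpolate(k0: Cuboid, k1: Cuboid, f0: int, f1: int, frame: int) -> Cuboid:
    """Estimate the cuboid at `frame` from keyframes at frames f0 < f1."""
    if f0 >= f1 or not f0 <= frame <= f1:
        raise ValueError("expected f0 < f1 and f0 <= frame <= f1")
    t = (frame - f0) / (f1 - f0)
    return Cuboid(
        x=lerp(k0.x, k1.x, t),
        y=lerp(k0.y, k1.y, t),
        z=lerp(k0.z, k1.z, t),
        length=lerp(k0.length, k1.length, t),
        width=lerp(k0.width, k1.width, t),
        height=lerp(k0.height, k1.height, t),
        yaw=lerp_angle(k0.yaw, k1.yaw, t),
    )
```

Linear interpolation assumes roughly constant velocity between keyframes, so turning or braking objects generally need closer keyframes or a motion model.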

From Sensor to Model — The End-to-End Workflow

Why Teams Choose JTheta.ai

1️⃣ Enterprise-Scale Performance

Handle millions of point clouds with distributed processing, data versioning, and intelligent batching.

2️⃣ Continuous Learning Feedback

Integrate model feedback directly into annotation loops — smarter pre-labels every iteration.

3️⃣ Cross-Project Intelligence

Global ontology learning improves consistency across every new dataset.

4️⃣ Flexible Infrastructure

Deploy on private cloud, hybrid, or fully on-prem — with full data traceability.

5️⃣ Compliance & Security

HIPAA-ready, ISO-aligned, and enterprise-grade for automotive, defense, and industrial use.

Business Impact & ROI

From 10,000 frames to 10 million — JTheta delivers precision, compliance, and scale.

| Outcome | Impact |
| --- | --- |
| Annotation Efficiency | Cut labeling time by up to 70% with AI pre-labeling and smart propagation. |
| Quality Improvement | Reduce human error through automated QA and reviewer alignment. |
| Faster Time-to-Market | Accelerate perception model deployment up to 3×. |
| Lower Cost of Operations | Automate repetitive labeling tasks and scale efficiently. |
| Effortless Scalability | Expand from pilot to enterprise workloads without changing infrastructure. |

Trusted by Teams Working On

🚗 ADAS & Full Autonomy Development

🤖 Robotics & Drone Navigation

🏙️ Smart Infrastructure & Industrial Automation

🗺️ Geospatial & Surveying Applications

🛡️ Defense & Security AI

Experience JTheta LiDAR in Action

Transform your LiDAR data into model-ready intelligence.

See AI-assisted annotation, calibration, and automation in a live demo.

FAQ

What is LiDAR annotation?

LiDAR annotation is the process of labeling 3D point cloud data generated by LiDAR sensors. It involves identifying objects such as vehicles, pedestrians, or road elements and representing them as 3D bounding boxes, polygons, or semantic masks. These annotations are essential for training perception models in autonomous systems, robotics, and geospatial intelligence.
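As a small illustration of what a 3D bounding box label means in practice, the sketch below tests whether a LiDAR point falls inside an oriented cuboid. The function name, argument layout, and the yaw-only rotation are assumptions made for the example; real annotation tools may also model pitch and roll.

```python
import math
from typing import Sequence


def point_in_cuboid(
    point: Sequence[float],
    center: Sequence[float],
    size: Sequence[float],
    yaw: float,
) -> bool:
    """Return True if `point` (x, y, z) lies inside the cuboid.

    `size` is (length, width, height) in meters; `yaw` is the rotation of the
    box around the vertical axis, in radians. Length runs along the box's
    local x axis.
    """
    dx = point[0] - center[0]
    dy = point[1] - center[1]
    dz = point[2] - center[2]

    # Rotate the offset by -yaw to express it in the box's local frame.
    cos_y, sin_y = math.cos(yaw), math.sin(yaw)
    local_x = cos_y * dx + sin_y * dy
    local_y = -sin_y * dx + cos_y * dy

    length, width, height = size
    return (
        abs(local_x) <= length / 2
        and abs(local_y) <= width / 2
        and abs(dz) <= height / 2
    )
```

Counting the points that pass this test for each cuboid is one simple way to spot empty or badly placed boxes during review.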

How does JTheta.ai’s LiDAR platform improve accuracy?

JTheta.ai integrates AI-assisted pre-labeling, automated calibration, and motion-based interpolation to ensure spatial and temporal consistency. Our multi-reviewer workflows and automated QA further enhance precision across millions of frames.

Does JTheta support sensor fusion?

Yes. JTheta.ai supports LiDAR, camera, and radar fusion. The platform aligns multi-modal data streams with sub-centimeter calibration accuracy, so perception models can be trained on a unified view of the scene.
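For readers new to LiDAR-camera fusion, here is a minimal sketch of the underlying geometry: extrinsic parameters (a rotation and translation) move a point from the LiDAR frame into the camera frame, and pinhole intrinsics (`fx`, `fy`, `cx`, `cy`) map it to a pixel. The function name and parameters are hypothetical, lens distortion is ignored, and this is not a description of JTheta's calibration pipeline.

```python
from typing import Optional, Sequence, Tuple


def project_to_pixel(
    point_lidar: Sequence[float],
    rotation: Sequence[Sequence[float]],
    translation: Sequence[float],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> Optional[Tuple[float, float]]:
    """Project a LiDAR point to (u, v) pixel coordinates, or None if behind the camera.

    `rotation` (3x3) and `translation` (3,) are the extrinsics that map LiDAR
    coordinates into the camera frame, where z points out of the lens.
    """
    # Extrinsics: p_cam = R * p_lidar + t
    x, y, z = (
        sum(rotation[i][j] * point_lidar[j] for j in range(3)) + translation[i]
        for i in range(3)
    )
    if z <= 0:
        return None  # The point is behind the image plane.

    # Intrinsics: pinhole projection onto the image.
    return (fx * x / z + cx, fy * y / z + cy)
```

A small error in the extrinsics shifts every projected point, which is why calibration accuracy matters so much when overlaying point clouds on images.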

Can JTheta be deployed on-premises?

Absolutely. JTheta.ai offers full deployment flexibility — cloud, hybrid, or on-prem — with complete control over your data pipeline, making it ideal for defense, healthcare, and automotive enterprises that require data sovereignty.

What export formats are supported?

You can export labeled data in multiple formats, including KITTI, PCD, JSON, and ROS, for use with popular machine learning and 3D processing tools such as PyTorch, TensorFlow, and Open3D.
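As an example of what one of these formats looks like, KITTI object labels store one object per line with 15 space-separated fields: type, truncation, occlusion, observation angle, the 2D box, the 3D dimensions (height, width, length), the 3D location in camera coordinates, and the rotation around the camera's vertical axis. The sketch below formats one such line; the `KittiObject` class and `to_kitti_line` function are hypothetical, and the exact output of JTheta's exporter may differ.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class KittiObject:
    """One object in KITTI label layout (names here are illustrative)."""
    type: str                                  # e.g. "Car", "Pedestrian"
    truncated: float                           # 0.0 (fully visible) to 1.0
    occluded: int                              # occlusion state code
    alpha: float                               # observation angle, radians
    bbox: Tuple[float, float, float, float]    # left, top, right, bottom (px)
    dimensions: Tuple[float, float, float]     # height, width, length (m)
    location: Tuple[float, float, float]       # x, y, z in camera coords (m)
    rotation_y: float                          # yaw around camera Y, radians


def to_kitti_line(obj: KittiObject) -> str:
    """Serialize one object as a single KITTI label line."""
    fields = [
        obj.type,
        f"{obj.truncated:.2f}",
        str(obj.occluded),
        f"{obj.alpha:.2f}",
    ]
    fields += [f"{v:.2f}" for v in obj.bbox]
    fields += [f"{v:.2f}" for v in obj.dimensions]
    fields += [f"{v:.2f}" for v in obj.location]
    fields.append(f"{obj.rotation_y:.2f}")
    return " ".join(fields)
```

Note that KITTI expresses the 3D location and rotation in the camera frame, so cuboids labeled in LiDAR coordinates need to be transformed with the calibration before export.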

What industries benefit most from JTheta’s LiDAR Platform?

JTheta.ai is built for autonomous vehicle developers, robotics teams, smart infrastructure projects, mapping & surveying organizations, and defense R&D groups — any domain where 3D perception is mission-critical.

How does JTheta ensure compliance and security?

The platform is designed to meet HIPAA and GDPR requirements, supports encryption at rest and in transit, and provides full audit trails and data lineage for enterprise and defense-grade compliance.