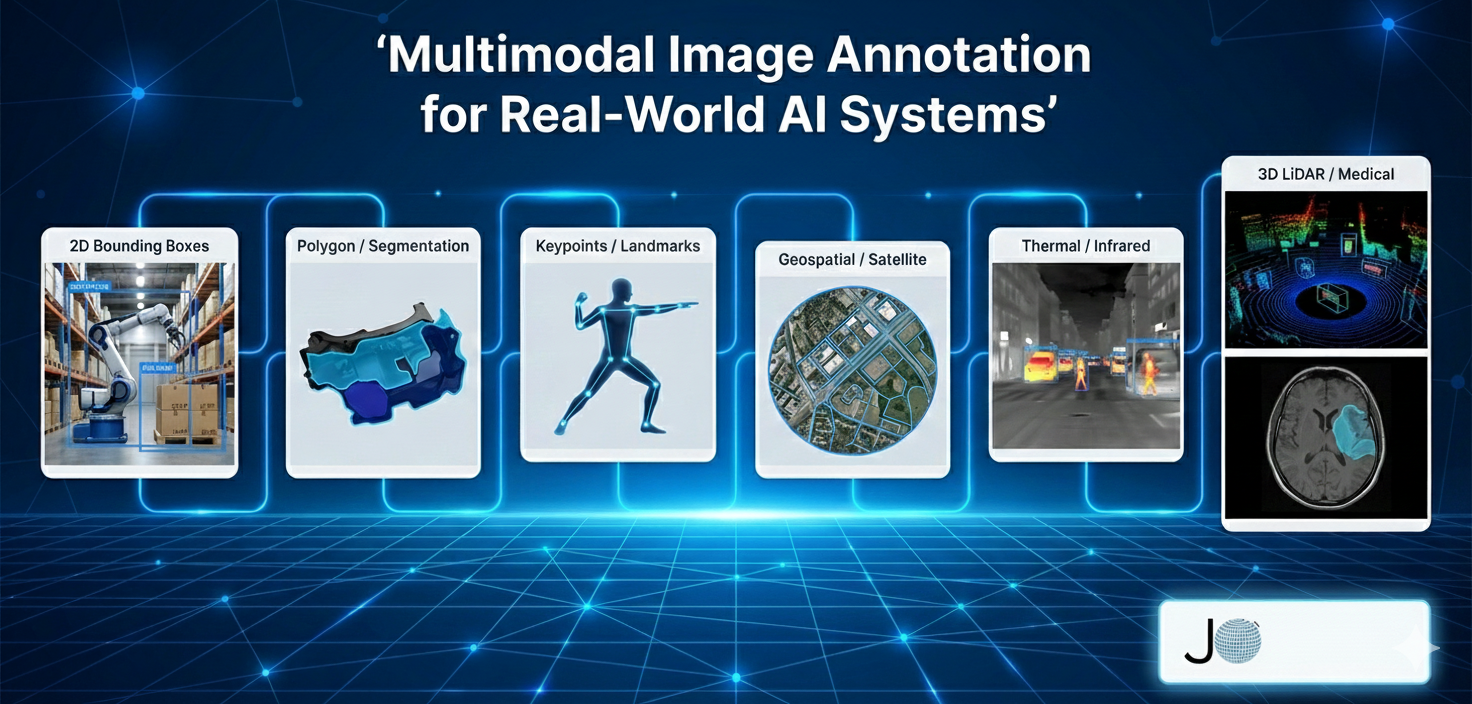

General Image Annotation & Multimodal Vision: Why JTheta.ai is Built for Real-World AI Systems

In a world where AI is no longer limited to simple classification — where robots, surveillance systems, medical tools, and autonomous agents interpret not just photos but varied image modalities — annotation needs have become more complex. At JTheta.ai, we built our platform with that complexity in mind. We support a wide set of annotation modalities and types to meet real-world AI demands, from 2D images to infrared maps, geospatial imagery, LiDAR/3D, and medical scans.

Below, we explain how general image annotation — under the umbrella of multimodal annotation capabilities — powers modern AI pipelines. We also show how JTheta.ai’s technical design and workflow features address the challenges of building robust datasets for research or production use.

Understanding Annotation Modalities & Why They Matter

Depending on your AI task, you may need different annotation types. Common ones include:

- Bounding Boxes — annotate objects with rectangular boxes; ideal for object detection and localization tasks

- Polygons / Polylines — outline irregularly shaped objects, allowing better shape representation than simple boxes. Useful for segmentation of non-rectangular objects.

- Keypoints / Landmarks — mark specific points of interest (e.g. joints, landmarks, corners). Important for pose estimation, landmark detection, feature-based tasks.

- Semantic Segmentation — assign a class label to every pixel (or region) in the image. This is necessary for dense prediction tasks like scene understanding or medical imaging.

- Instance Segmentation — extends semantic segmentation by differentiating between multiple instances of the same class in an image. Useful when you need to detect and separate several objects of the same type.

- Which annotation type you choose significantly impacts model performance, downstream tasks, and computational needs. For example, a simple detection model might only need bounding boxes, but a robotics navigation system or medical diagnostic model could require pixel-level segmentation or landmark extraction.

Modality Beyond RGB — Multimodal Data Support in JTheta.ai

Today’s AI problems often go beyond standard RGB images. Recognizing this, JTheta.ai supports multiple modalities and specialized data types:

- Standard RGB / 2D Images — everyday photo datasets, retail images, product catalogs, general object recognition. https://www.jtheta.ai/general-image-annotation

- Geospatial & Aerial Data — for mapping, drone imagery, satellite photos, urban planning, etc. JTheta supports annotation for geospatial imagery including terrain, buildings, roads. https://www.jtheta.ai/geospatial-satellite-imaging/

- Infrared / SAR / Thermal / Remote Sensing — for surveillance, defense, night-vision, remote sensing tasks, where data may be grayscale, thermal, radar-based or low-visibility. https://www.jtheta.ai/sar-infrared-image-annotation-page/

- 3D / LiDAR / Point-cloud / Spatial Data — for robotics, autonomous driving, 3D perception, spatial understanding. JTheta offers dedicated 3D spatial annotation capabilities.https://www.jtheta.ai/3d-point-cloud-lidar-automation-platform/

- Medical Imaging (DICOM / NIfTI / 3D scans) — for radiology AI, diagnostics, segmentation of medical scans. JTheta supports DICOM annotation and NIfTI (multi-slice / 3D) annotation workflows. https://www.jtheta.ai/dicom-image-annotation/

- This multimodal support makes JTheta.ai not just an image annotation tool — but a versatile platform for any domain where visual data varies in type, format, and complexity.

JTheta.ai Workflow: From Upload to Export — Built for Developers & ML Teams

Using JTheta.ai means more than just drawing boxes. The platform is designed to integrate seamlessly into real-world ML pipelines by providing:

- AI-Assisted Pre-labeling: Before manual annotation, JTheta can auto-detect objects and propose preliminary labels, which human annotators then review and refine — drastically speeding up workflow.

- Flexible Annotation Types: As described above — bounding boxes, polygons, keypoints, segmentation masks, instance segmentation. Works across all supported modalities.

- Collaboration & Project Management: Multi-user support with roles (Annotator / Reviewer / Admin), version history, progress tracking — helpful for large teams or distributed annotation workflows.

- Export-Ready, ML-Friendly Outputs: After annotation, export your dataset in standard formats (compatible with common ML libraries or frameworks), ready for model training.

- Modality-Specific Features: For example, in medical imaging: slice navigation across planes; in 3D / LiDAR: spatial alignment, drift correction; in remote sensing / SAR: support for IR/SAR data and geospatial formats. (See respective pages)

This smooth end-to-end workflow makes JTheta.ai ideal for teams and researchers who want to go from raw data to training-ready datasets quickly and reliably.

Why This Matters: Real-World AI Use-Cases Powered by Proper Annotation

Using multimodal annotation properly unlocks powerful, production-ready AI systems in varied domains:

- Autonomous Vehicles & Robotics — spatial understanding from LiDAR + RGB + segmentation helps robots and vehicles navigate, detect obstacles, and interact with their environment.

- Defense, Surveillance, Remote Sensing — IR/SAR and geospatial annotation enable intelligence systems to operate even under low-visibility, night-time, or atmospheric/terrain-challenged conditions.

- Healthcare & Medical AI — accurate segmentation and annotation of MRI/CT scans helps build diagnostic tools for tumor detection, organ segmentation, and medical image analysis.

- Urban Planning & Agritech — aerial, drone, and satellite data annotation supports mapping, land-use classification, disaster response, agriculture monitoring.

- Retail & Ecommerce — object detection and segmentation on product images help build inventory, catalog management, catalog enhancement, and recommendation systems.

These are just a few examples. The combination of diverse modalities + annotation types + streamlined workflow lets you build robust AI systems for almost any visual domain.

Technical Tips for Annotation Projects on JTheta.ai

Recommendation

Choose annotation type carefully (box, polygon, keypoint, segmentation) |

Leverage AI-assisted pre-labeling first, then manual review |

Use collaborative workflows and roles (Annotator, Reviewer, Admin) |

Keep metadata consistent & well-defined (classes, labels, formats) |

Use modality-specific viewers for complex data (3D, medical, IR, geospatial) |

Why It Matters

The chosen annotation type affects model complexity, performance, and data size — avoid over/under-annotating. |

Speeds up work dramatically — important when working with large datasets or multimodal data. |

Helps maintain consistency & quality when multiple people annotate or review. |

Ensures clean datasets, helps downstream model training, and prevents errors. |

Conclusion: JTheta.ai — A Unified, Multimodal Annotation Platform for Real-World AI

In a landscape where AI systems are expected to handle everything from RGB photos to satellite images, infrared scans, LiDAR point-clouds, and medical imaging — a one-size-fits-all annotation tool doesn’t cut it. JTheta.ai fills that gap with:

- Support for multiple data modalities (2D, 3D, thermal, geospatial, medical)

- A full suite of annotation types (bounding box, polygon, keypoint, segmentation, instance segmentation)

- AI-assisted annotation + manual review for speed and accuracy

- Export-ready dataset formats + integration potential for ML pipelines

- Collaboration, role-based workflows, and project management features suitable for teams

Whether you are a researcher, data scientist, ML engineer, or enterprise team — JTheta.ai gives you the flexibility, technical depth, and ease-of-use to build reliable datasets that power real-world AI systems.