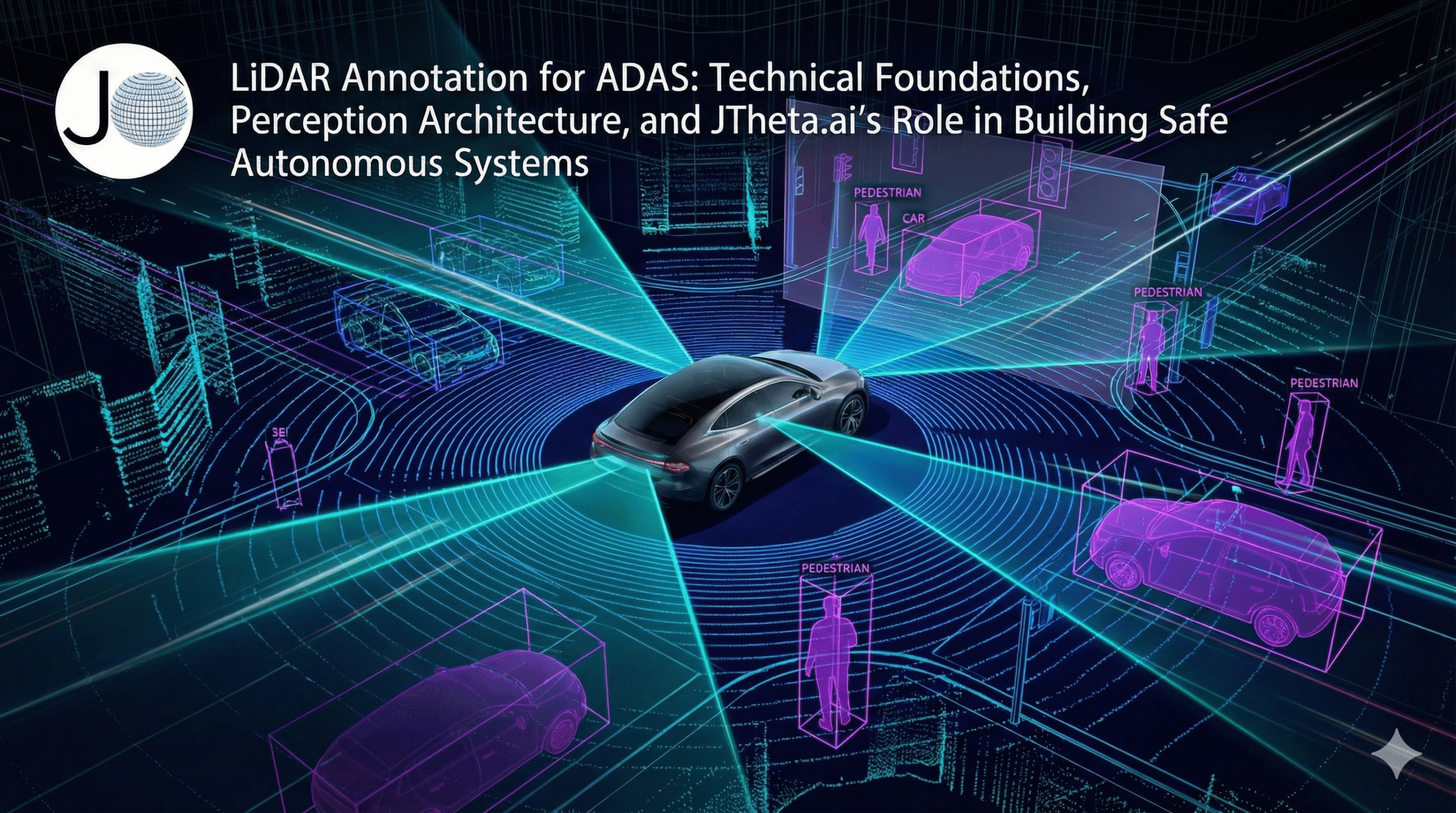

LiDAR Annotation for ADAS: Technical Foundations, Perception Architecture, and JTheta.ai’s Role in Building Safe Autonomous Systems

Introduction

Advanced Driver Assistance Systems (ADAS) and Autonomous Driving (AD) solutions depend heavily on high-quality perception models capable of understanding complex real-world environments. Many modern ADAS and AD pipelines treat LiDAR as a primary sensor because it produces dense, accurate 3D point clouds independent of ambient lighting and holds up better than cameras in many difficult conditions.

However, LiDAR data alone does not create intelligence. ADAS reliability depends on the annotated ground-truth datasets used to train neural networks for object detection, semantic segmentation, tracking, and prediction.

JTheta.ai provides the scalable, enterprise-grade LiDAR annotation infrastructure required to build these ground-truth datasets with OEM-level precision.

Explore platform capabilities:

https://www.jtheta.ai/lidar-annotation/

1. The Technical Role of LiDAR in ADAS Perception Systems

ADAS perception pipelines typically include:

- Object detection

- Classification

- Drivable area segmentation

- Lane and boundary extraction

- Obstacle tracking

- Trajectory prediction

LiDAR contributes directly to each layer by providing:

1.1 High-Resolution Spatial Geometry

LiDAR measures range with centimeter-level accuracy and produces dense point clouds at short and medium range, enabling precise estimation of:

- Object shape

- Object distance

- Surface roughness

- Elevation and slopes

- Road topology
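The function below is a minimal sketch of how several of these quantities follow from raw geometry. It derives distance, extent, and elevation range from the points of a single, already-segmented object. The function name, the dictionary keys, and the sensor-frame convention (x forward, y left, z up, metres) are illustrative assumptions and are not part of any JTheta.ai API. The extent is axis-aligned, not an oriented box.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in the sensor frame, metres


def object_geometry(points: List[Point]) -> Dict[str, object]:
    """Hypothetical helper: basic geometry of one segmented object.

    Assumes all points belong to a single object and use an
    x-forward, y-left, z-up sensor frame.
    """
    if not points:
        raise ValueError("object has no points")
    xs, ys, zs = zip(*points)
    n = len(points)
    cx, cy = sum(xs) / n, sum(ys) / n
    return {
        # Horizontal distance from the sensor origin to the object's centroid
        "distance_m": math.hypot(cx, cy),
        # Axis-aligned extent along x, y, z (not an oriented bounding box)
        "extent_m": (max(xs) - min(xs), max(ys) - min(ys), max(zs) - min(zs)),
        # Lowest and highest returns, useful for elevation and clearance checks
        "z_range_m": (min(zs), max(zs)),
    }
```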

1.2 Temporal Stability and Velocity Estimation

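Typical automotive LiDAR sensors, both spinning and solid-state, deliver a full frame roughly 10 to 20 times per second and return hundreds of thousands to a few million points per second. Following an object's points across consecutive frames yields its displacement over time, and therefore its velocity.

This temporal resolution improves: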

- Object tracking

- Motion compensation

- Multi-frame occupancy mapping
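In its simplest form, velocity estimation from tracked objects is finite differencing: divide the displacement of an object's centroid by the time elapsed between frames. The sketch below assumes centroids have already been associated into a single track and expressed in an ego-motion-compensated frame. The function name is hypothetical, and real trackers typically smooth these estimates, for example with a Kalman filter.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def estimate_velocity(centroids: Sequence[Vec3], timestamps: Sequence[float]) -> Vec3:
    """Hypothetical helper: average velocity (m/s) of one tracked object.

    Assumes centroids (metres) are already associated into one track and
    expressed in a fixed, ego-motion-compensated frame; timestamps are seconds.
    """
    if len(centroids) != len(timestamps) or len(centroids) < 2:
        raise ValueError("need at least two matched centroid/timestamp pairs")
    dt = timestamps[-1] - timestamps[0]
    if dt <= 0:
        raise ValueError("timestamps must increase")
    first, last = centroids[0], centroids[-1]
    # Finite difference over the whole track: v = (p_last - p_first) / dt
    vx, vy, vz = ((b - a) / dt for a, b in zip(first, last))
    return (vx, vy, vz)
```

Consider an object whose centroid advances 1 m per frame at an assumed 10 Hz frame rate. Its estimated speed along x is about 10 m/s.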

1.3 Superior Performance in Edge Cases

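Because LiDAR is an active sensor that supplies its own illumination, it is more resilient than cameras to:

- Overexposure

- Night driving

- Sun and headlight glare

- Low-texture scenes

LiDAR is not immune to weather. Dense fog, heavy rain, and snow scatter the laser pulses, which reduces range and adds spurious returns. This is one reason ADAS stacks fuse LiDAR with radar and cameras. Even so, its robustness in the edge cases above makes LiDAR a valuable sensor for Level 2+ and Level 3 ADAS and for higher levels of autonomy.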

2. Why LiDAR Annotation Is Critical for ADAS ML Models

Neural networks in ADAS perception stacks are typically trained with supervised learning on annotated LiDAR frames. These annotations form the “ground truth” that the models learn to reproduce.

2.1 Required Annotation Types for ADAS

(i) Bounding Box Annotation (3D)

Used for the detection of pedestrians, cars, trucks, cyclists, and static roadway structures.
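A common way to parameterize a 3D box is by its centre, its size (length, width, height), and a heading angle (yaw) about the vertical axis. The sketch below assumes that parameterization and implements a point-in-box test. Annotation QA often uses a test like this to count the LiDAR points that support each box. `Box3D` and `count_points_in_box` are hypothetical names for illustration.

```python
import math
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass
class Box3D:
    """Hypothetical box type: centre (m), size (m), heading yaw (rad, about +z)."""
    cx: float
    cy: float
    cz: float
    length: float  # extent along the box heading (local x)
    width: float   # extent across the heading (local y)
    height: float  # extent along z
    yaw: float

    def contains(self, x: float, y: float, z: float) -> bool:
        # Translate into the box frame, then rotate by -yaw so the box is axis-aligned
        dx, dy, dz = x - self.cx, y - self.cy, z - self.cz
        c, s = math.cos(-self.yaw), math.sin(-self.yaw)
        lx = c * dx - s * dy
        ly = s * dx + c * dy
        return (abs(lx) <= self.length / 2
                and abs(ly) <= self.width / 2
                and abs(dz) <= self.height / 2)


def count_points_in_box(box: Box3D, points: Iterable[Tuple[float, float, float]]) -> int:
    """Number of points inside the box; boxes with very few points are QA candidates."""
    return sum(1 for p in points if box.contains(*p))
```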

(ii) Semantic Segmentation

Assigns a class to every point (e.g., road, curb, pole, vegetation, building).
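Semantic labels are usually stored as one integer class ID per point. The sketch below shows how such labels are typically evaluated. It computes per-class intersection-over-union (IoU), which is the basis of the mean IoU metric commonly reported on LiDAR segmentation benchmarks. The function name and the class IDs in the example (0 = road, 1 = curb, 2 = pole) are hypothetical.

```python
from collections import Counter
from typing import Dict, Sequence


def per_class_iou(pred: Sequence[int], gt: Sequence[int]) -> Dict[int, float]:
    """Hypothetical helper: IoU for each class ID present in pred or gt."""
    if len(pred) != len(gt):
        raise ValueError("pred and gt must label the same points")
    tp, fp, fn = Counter(), Counter(), Counter()
    for p, g in zip(pred, gt):
        if p == g:
            tp[g] += 1
        else:
            fp[p] += 1  # point wrongly assigned to class p
            fn[g] += 1  # point of class g that was missed
    classes = set(pred) | set(gt)
    # Every listed class has at least one tp, fp, or fn, so the denominator is > 0
    return {c: tp[c] / (tp[c] + fp[c] + fn[c]) for c in sorted(classes)}
```

In the test case, one road point is labeled as curb. As a result, road IoU drops to 0.5 and curb IoU to 2/3, while pole stays at 1.0.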

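(iii) Instance Segmentation

Assigns each point an instance ID in addition to its class, so individual objects of the same class (for example, two pedestrians walking side by side) can be told apart.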
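The SemanticKITTI dataset uses one common storage convention: both IDs are packed into a single 32-bit value per point. The lower 16 bits hold the semantic class and the upper 16 bits hold the instance ID. The helpers below sketch that packing. The function names and the class ID 7 used in the test are hypothetical.

```python
from typing import Tuple


def pack_label(semantic: int, instance: int) -> int:
    """Hypothetical helper: SemanticKITTI-style packing.

    Lower 16 bits = semantic class ID, upper 16 bits = instance ID.
    """
    if not (0 <= semantic < 1 << 16 and 0 <= instance < 1 << 16):
        raise ValueError("IDs must fit in 16 bits")
    return (instance << 16) | semantic


def unpack_label(label: int) -> Tuple[int, int]:
    """Inverse of pack_label: returns (semantic, instance)."""
    return label & 0xFFFF, label >> 16
```

Two pedestrians labeled with the same class but different instance IDs yield distinct packed values, yet both share the same lower 16 bits.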

(iv) Polyline / Surface Annotation

Required for:

- Lane markings

- Road boundaries

- Guardrails

- Drivable zone segmentation
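Lane markings and road boundaries are typically stored as polylines, meaning ordered lists of 3D vertices. Exports often resample these to a fixed vertex spacing so that downstream models see consistent geometry. The sketch below shows one way to do this, using linear interpolation along arc length. The function name and the spacing parameter are assumptions for illustration.

```python
import math
from typing import List, Tuple

Pt = Tuple[float, float, float]


def resample_polyline(vertices: List[Pt], spacing: float) -> List[Pt]:
    """Hypothetical helper: resample a 3D polyline at near-uniform arc-length spacing.

    Keeps the first and last vertices; intermediate points are linearly
    interpolated along the original segments.
    """
    if len(vertices) < 2 or spacing <= 0:
        raise ValueError("need >= 2 vertices and a positive spacing")
    # Cumulative arc length at each vertex
    cum = [0.0]
    for a, b in zip(vertices, vertices[1:]):
        cum.append(cum[-1] + math.dist(a, b))
    total = cum[-1]
    n_steps = max(1, round(total / spacing))
    out: List[Pt] = []
    seg = 0
    for i in range(n_steps + 1):
        s = total * i / n_steps  # target arc length; the last step lands exactly on the end
        # Advance to the segment that contains arc length s
        while seg < len(vertices) - 2 and cum[seg + 1] < s:
            seg += 1
        seg_len = cum[seg + 1] - cum[seg]
        t = 0.0 if seg_len == 0 else (s - cum[seg]) / seg_len
        a, b = vertices[seg], vertices[seg + 1]
        out.append(tuple(pa + t * (pb - pa) for pa, pb in zip(a, b)))
    return out
```

The step count is rounded to a whole number. The actual spacing is therefore the total length divided by that number, which can differ slightly from the requested value.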

(v) Ground / Non-Ground Classification

Separates ground returns from everything above the ground; essential for HD mapping and trajectory planning.
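Annotation tools commonly pre-label ground points automatically, so annotators only need to correct the result. The sketch below uses a deliberately simple grid heuristic. A point is marked as ground if it lies within a height tolerance of the lowest point in its 2D cell. The function name, cell size, and tolerance are illustrative assumptions. Production pipelines generally use more robust methods, such as RANSAC plane fitting.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple


def label_ground(points: List[Tuple[float, float, float]],
                 cell_size: float = 1.0,
                 height_tol: float = 0.2) -> List[bool]:
    """Hypothetical helper: one flag per point, True if classified as ground.

    Heuristic: bin points into a 2D (x, y) grid; within each cell, points no
    more than `height_tol` metres above the cell's lowest z count as ground.
    """
    cell_min: Dict[Tuple[int, int], float] = defaultdict(lambda: math.inf)
    keys = []
    # Pass 1: find the lowest z in every occupied cell
    for x, y, z in points:
        key = (math.floor(x / cell_size), math.floor(y / cell_size))
        keys.append(key)
        cell_min[key] = min(cell_min[key], z)
    # Pass 2: compare each point with its cell minimum
    return [z - cell_min[key] <= height_tol for (_, _, z), key in zip(points, keys)]
```

This heuristic has a known weakness. A cell with no true ground returns, such as one fully covered by a vehicle roof, will have its lowest object points marked as ground. Human review must catch exactly this kind of error.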

JTheta.ai supports each of these annotation types with dedicated, precision-focused workflows:

https://www.jtheta.ai/point-cloud-annotation/

3. Deep Dive: LiDAR–Camera Sensor Fusion and Why Annotation Consistency Matters

ADAS systems rarely depend on one sensor alone. Instead, they operate using sensor fusion, typically combining:

- LiDAR (geometry + depth)

- RGB camera (texture + color)

- Radar (velocity + range)

3.1 Fusion Pipelines Require Unified Annotation

To support accurate 3D perception, fused datasets need:

- Synchronized timestamps

- Calibrated coordinate systems

- Cross-view object consistency

- Shared class schema
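Timestamp synchronization can be illustrated by pairing each LiDAR sweep with the nearest camera frame and rejecting pairs that are too far apart in time. The sketch below assumes both sensors report timestamps in seconds on a shared clock. The 20 ms tolerance and the function name are hypothetical choices, not a standard.

```python
import bisect
from typing import List, Optional


def match_nearest(lidar_ts: List[float], camera_ts: List[float],
                  max_offset: float = 0.02) -> List[Optional[int]]:
    """Hypothetical helper: nearest camera frame index for each LiDAR sweep.

    `camera_ts` must be sorted ascending. Sweeps with no camera frame within
    `max_offset` seconds map to None and would be left out of fused labeling.
    """
    matches: List[Optional[int]] = []
    for t in lidar_ts:
        i = bisect.bisect_left(camera_ts, t)
        # The nearest frame is either the last one before t or the first one at/after t
        candidates = [j for j in (i - 1, i) if 0 <= j < len(camera_ts)]
        best = min(candidates, key=lambda j: abs(camera_ts[j] - t), default=None)
        if best is not None and abs(camera_ts[best] - t) <= max_offset:
            matches.append(best)
        else:
            matches.append(None)
    return matches
```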

3.2 Annotation-Driven Calibration

High-quality annotations allow ML engineers to:

- Validate extrinsic/intrinsic sensor calibration

- Align point clouds with 2D frames

- Evaluate reprojection errors
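To check calibration against annotations, LiDAR points are projected into the image and their distance from the annotated 2D positions is measured. The sketch below makes several assumptions: a pinhole camera model with no lens distortion, an extrinsic rotation `R` and translation `t` that map LiDAR coordinates into the camera frame, and an intrinsic matrix `K`. The function names and the example matrices are hypothetical.

```python
import math
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Sequence[Sequence[float]]


def project_point(p_lidar: Vec3, R: Mat3, t: Sequence[float], K: Mat3) -> Optional[Tuple[float, float]]:
    """Hypothetical helper: project one LiDAR point to pixel coordinates (u, v).

    R, t map LiDAR coordinates into the camera frame (x right, y down,
    z forward); K is the 3x3 intrinsic matrix. Lens distortion is ignored.
    Returns None for points with non-positive depth (behind the camera).
    """
    # Camera-frame point: p_cam = R @ p_lidar + t
    pc = [sum(R[r][c] * p_lidar[c] for c in range(3)) + t[r] for r in range(3)]
    if pc[2] <= 0:
        return None
    # Homogeneous pixel coordinates: K @ p_cam, then divide by depth
    uvw = [sum(K[r][c] * pc[c] for c in range(3)) for r in range(3)]
    return uvw[0] / uvw[2], uvw[1] / uvw[2]


def mean_reprojection_error(lidar_pts: List[Vec3], pixels: List[Tuple[float, float]],
                            R: Mat3, t: Sequence[float], K: Mat3) -> float:
    """Mean pixel distance between projected LiDAR points and their annotated 2D positions."""
    errors = []
    for p, (u_ann, v_ann) in zip(lidar_pts, pixels):
        uv = project_point(p, R, t, K)
        if uv is not None:
            errors.append(math.hypot(uv[0] - u_ann, uv[1] - v_ann))
    if not errors:
        raise ValueError("no annotated point projects in front of the camera")
    return sum(errors) / len(errors)
```

In the test case, one point projects exactly onto its annotation and a second lands 5 pixels away, giving a mean error of 2.5 pixels. A consistent offset that persists across many frames may indicate an extrinsic calibration error rather than annotation noise.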

JTheta.ai’s multimodal annotation suite ensures this consistency across large datasets:

https://www.jtheta.ai/multiple-image-annotation-techniques/

4. Technical Challenges in LiDAR Annotation and How JTheta.ai Solves Them

LiDAR annotation is far more complex than image annotation due to:

4.1 Sparse & Irregular Point Distribution

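Point density varies widely. For a fixed angular resolution, the number of returns on an object falls off roughly with the square of its range, and occlusions and low-reflectivity surfaces leave further gaps. In an urban scene, a distant pedestrian may be represented by only a handful of points.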

JTheta.ai resolves this using:

- Density-aware annotation tools

- 3D voxelization-assisted rendering

- Adjustable point cloud slicing
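Voxelization is one common way to even out variable point density for rendering. Points are grouped into cubic voxels, and each occupied voxel is replaced by the centroid of its points. The sketch below illustrates the idea only. It is not JTheta.ai's rendering implementation, and the function name and voxel size are assumptions.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]


def voxel_downsample(points: List[Point], voxel_size: float) -> List[Point]:
    """Hypothetical helper: replace the points in each cubic voxel with their centroid.

    Dense near-range regions shrink a lot, while sparse far-range regions
    (often one point per voxel) are left nearly unchanged.
    """
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    # Per-voxel running sums: [sum_x, sum_y, sum_z, count]
    buckets: Dict[Tuple[int, int, int], list] = defaultdict(lambda: [0.0, 0.0, 0.0, 0])
    for x, y, z in points:
        key = (math.floor(x / voxel_size), math.floor(y / voxel_size), math.floor(z / voxel_size))
        acc = buckets[key]
        acc[0] += x
        acc[1] += y
        acc[2] += z
        acc[3] += 1
    return [(sx / n, sy / n, sz / n) for sx, sy, sz, n in buckets.values()]
```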

4.2 Occlusions & Overlapping Geometry

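Vehicles, poles, and pedestrians often stand close together or occlude one another, so their points can merge in any single view.

JTheta.ai provides multi-view annotation modes so annotators can inspect overlapping geometry from several viewpoints and label partially occluded objects accurately.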

4.3 Multi-million Point Frames

Raw frames from high-resolution sensors can contain millions of points, and aggregated multi-sweep frames can exceed 100MB each.

JTheta.ai’s infrastructure supports:

- GPU-accelerated rendering

- Distributed annotation sessions

- Streaming-based dataset loading
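Frame size is simple arithmetic: the number of points times the bytes per point. Assume four float32 fields per point (x, y, z, intensity), as in KITTI-style `.bin` scans. Each point then takes 16 bytes, so a one-million-point frame is 16 MB uncompressed, and ten aggregated sweeps of that size reach 160 MB. The sketch below pairs that calculation with a chunked reader that streams such a file instead of loading it whole. The record layout and the function names are assumptions for illustration.

```python
import io
import struct
from typing import BinaryIO, Iterator, List, Tuple

# Assumed record layout: x, y, z, intensity as little-endian float32
# (the layout of KITTI-style .bin scans). Names below are hypothetical.
POINT_FORMAT = "<4f"
POINT_SIZE = struct.calcsize(POINT_FORMAT)  # 16 bytes per point


def frame_size_bytes(num_points: int, bytes_per_point: int = POINT_SIZE) -> int:
    """Uncompressed size of a frame made of fixed-size point records."""
    return num_points * bytes_per_point


def stream_points(f: BinaryIO, chunk_points: int = 65536) -> Iterator[List[Tuple[float, ...]]]:
    """Yield points in chunks so a large frame never has to fit in memory at once."""
    chunk_bytes = chunk_points * POINT_SIZE
    while True:
        buf = f.read(chunk_bytes)
        if not buf:
            return
        if len(buf) % POINT_SIZE:
            raise ValueError("file ends with a truncated point record")
        yield list(struct.iter_unpack(POINT_FORMAT, buf))


if __name__ == "__main__":
    # Demo: an in-memory "file" of five points, read two points at a time
    raw = b"".join(struct.pack(POINT_FORMAT, float(i), float(i), float(i), 0.5) for i in range(5))
    for chunk in stream_points(io.BytesIO(raw), chunk_points=2):
        print(len(chunk), chunk[0])
```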

4.4 Automotive-Grade Quality Requirements

ADAS programs often specify strict annotation accuracy targets, such as label error rates below 2%.

JTheta.ai maintains quality with:

- Multi-level reviewer workflows

- Automated rule-based QA

- Class ontology governance
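Automated rule-based QA typically checks each label against the class ontology and simple physical plausibility rules before a human reviewer sees it. The sketch below applies three rules: unknown class, implausible box dimensions, and too few supporting LiDAR points. The size ranges, the `MIN_POINTS` threshold, and all names are hypothetical values chosen for illustration. They are not automotive standards.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class BoxLabel:
    """Hypothetical label record for QA."""
    label_id: str
    cls: str
    size: Tuple[float, float, float]  # length, width, height in metres
    num_points: int                   # LiDAR points inside the box


# Hypothetical ontology with plausible (min, max) ranges for L, W, H per class
SIZE_RULES: Dict[str, Tuple[Tuple[float, float], ...]] = {
    "car": ((3.0, 6.0), (1.4, 2.2), (1.2, 2.2)),
    "pedestrian": ((0.2, 1.2), (0.2, 1.2), (1.0, 2.2)),
}
MIN_POINTS = 5  # hypothetical: boxes with fewer points are flagged for review


def qa_check(labels: List[BoxLabel]) -> List[str]:
    """Return one human-readable issue string per rule violation."""
    issues = []
    for lab in labels:
        rules = SIZE_RULES.get(lab.cls)
        if rules is None:
            issues.append(f"{lab.label_id}: class '{lab.cls}' not in ontology")
            continue
        for dim, value, (lo, hi) in zip("LWH", lab.size, rules):
            if not lo <= value <= hi:
                issues.append(f"{lab.label_id}: {dim}={value} outside [{lo}, {hi}] for {lab.cls}")
        if lab.num_points < MIN_POINTS:
            issues.append(f"{lab.label_id}: only {lab.num_points} points inside box")
    return issues
```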

Explore JTheta.ai platform:

https://www.jtheta.ai/

5. ADAS Use Cases Powered by Annotated LiDAR Datasets

5.1 Autonomous Lane Assist & Highway Pilot

LiDAR segmentation helps identify:

- Lane geometry

- Barriers

- Road curvature

- Drivable vs non-drivable surfaces

5.2 Urban Autonomous Navigation

City driving demands:

- Pedestrian detection

- Occlusion management

- Traffic sign recognition

- Curb & sidewalk mapping

5.3 HD Mapping & Localization

Annotated point clouds are core inputs for HD maps used by:

- Autonomous shuttles

- Robotaxis

- Cargo AVs

5.4 Predictive Traffic Systems

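Annotated 3D object tracks provide training data for motion-prediction models. These models forecast the future trajectories of surrounding road users and support safer ADAS decisions.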
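Learned motion-prediction models are commonly compared against a constant-velocity extrapolation as a baseline. The sketch below shows that baseline for a single tracked object in 2D. The function name, horizon, and time step are illustrative assumptions.

```python
from typing import List, Tuple


def predict_constant_velocity(position: Tuple[float, float], velocity: Tuple[float, float],
                              horizon_s: float, dt: float) -> List[Tuple[float, float]]:
    """Hypothetical baseline: future (x, y) positions assuming constant velocity (m/s)."""
    if dt <= 0 or horizon_s <= 0:
        raise ValueError("horizon_s and dt must be positive")
    steps = int(round(horizon_s / dt))
    # Position after k steps: p + v * (k * dt)
    return [(position[0] + velocity[0] * dt * k, position[1] + velocity[1] * dt * k)
            for k in range(1, steps + 1)]
```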

6. Why JTheta.ai Is the Optimal Partner for LiDAR + ADAS Annotation

6.1 Purpose-Built for 3D, ML, and Geospatial Data

Unlike generic labeling tools, JTheta.ai provides:

- Native LAS, LAZ, PCD, E57 support

- Multi-sensor fusion interfaces

- High-performance 3D visualization
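The sketch below gives a sense of what native format support involves. It parses the simplest variant of the PCD format used by the Point Cloud Library: a plain-text header (`FIELDS`, `COUNT`, `POINTS`, `DATA`, and so on) followed by ASCII rows. It handles only `DATA ascii` with one value per field. Binary PCD, LAS/LAZ, and E57 need dedicated readers. The function name is hypothetical.

```python
from typing import Dict, List


def read_ascii_pcd(text: str) -> List[Dict[str, float]]:
    """Hypothetical helper: parse an ASCII PCD file into one {field: value} dict per point.

    Supports only `DATA ascii` with COUNT 1 per field; binary and
    binary_compressed PCD files need a different reader.
    """
    header: Dict[str, List[str]] = {}
    lines = text.splitlines()
    for i, raw in enumerate(lines):
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        key, *values = line.split()
        header[key] = values
        if key == "DATA":
            if values != ["ascii"]:
                raise ValueError(f"unsupported DATA encoding: {values}")
            body = lines[i + 1:]
            break
    else:
        raise ValueError("no DATA line found")

    fields = header["FIELDS"]
    if any(c != "1" for c in header.get("COUNT", ["1"] * len(fields))):
        raise ValueError("only COUNT 1 fields are supported")
    points = [dict(zip(fields, map(float, row.split()))) for row in body if row.strip()]
    expected = int(header["POINTS"][0])
    if len(points) != expected:
        raise ValueError(f"header declares {expected} points, found {len(points)}")
    return points
```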

6.2 Automotive Ontology Support

Custom label schemas for OEMs, Tier-1 suppliers, and autonomous-driving startups.

6.3 Production-Scale Annotation Pipelines

JTheta.ai processes millions of LiDAR frames for enterprise workflows with:

- Batch automation

- API-based dataset ingestion

- Built-in quality benchmarking

6.4 End-to-End Delivery

From data preparation → annotation → QA → export into AV/ADAS formats.

Conclusion: LiDAR + ADAS Innovation Depends on High-Quality Annotation

As vehicles transition toward higher levels of autonomy, the accuracy and scalability of LiDAR annotation become a competitive differentiator.

ADAS perception models are only as reliable as the datasets they are trained on.

JTheta.ai delivers the annotation precision, multimodal workflows, and enterprise-grade infrastructure required to build the next generation of safe, robust ADAS systems.

Explore LiDAR annotation solutions:

https://www.jtheta.ai/lidar-annotation/

Explore 3D point cloud workflows:

https://www.jtheta.ai/point-cloud-annotation/