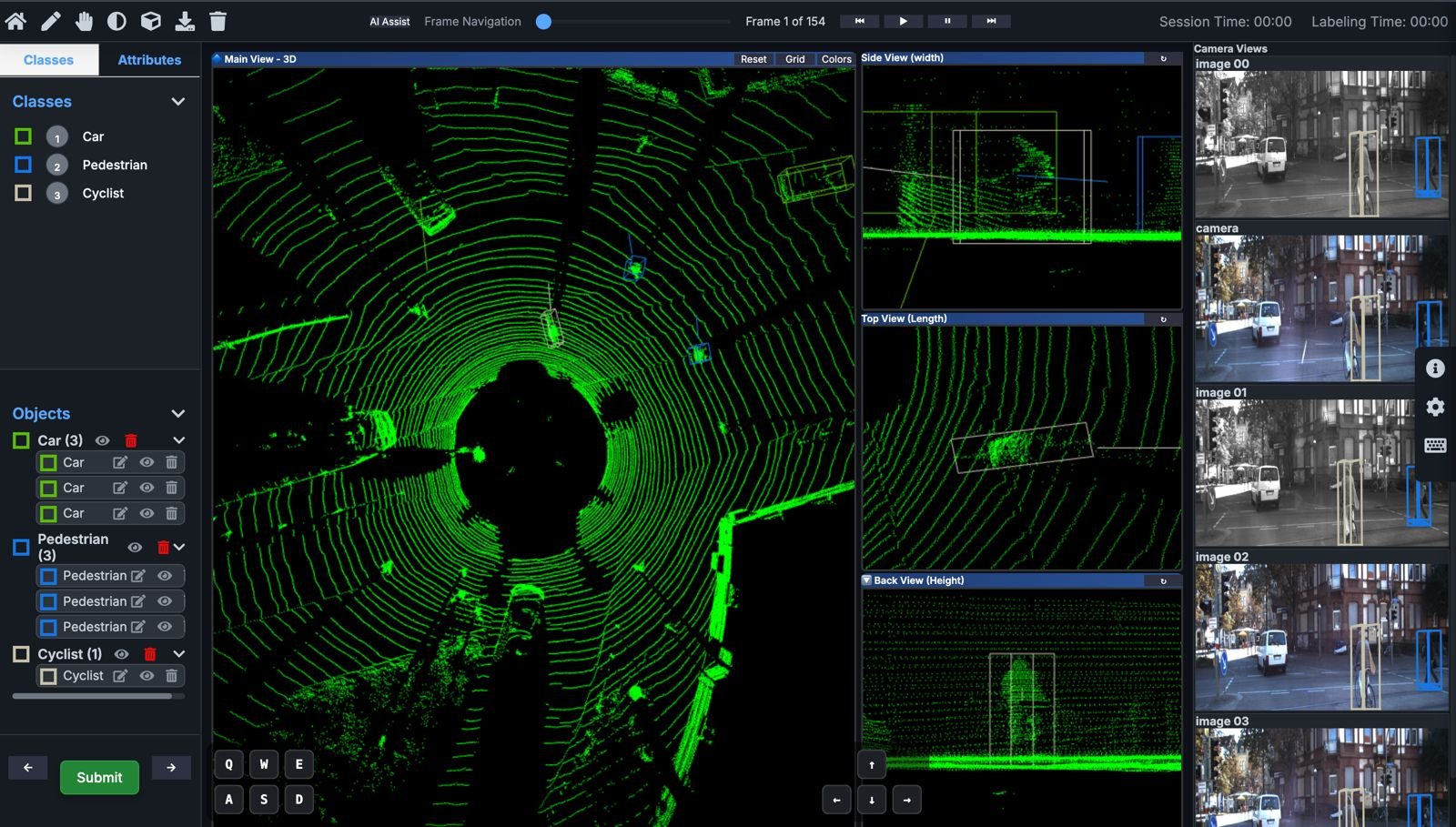

Guide to LiDAR Annotation: Building 3D Datasets for Autonomous Systems, Robotics, and Smart Cities

Introduction

LiDAR (Light Detection and Ranging) has become a cornerstone technology for 3D perception. By emitting laser pulses and measuring how long each one takes to return, a LiDAR sensor computes the distance to every reflecting surface and builds a dense 3D point cloud, a spatially rich representation of the environment. These point clouds are essential for training AI/ML models in autonomous driving, robotics, smart cities, environmental monitoring, and defense applications.
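To make the time-of-flight idea concrete, here is a minimal sketch of how one return becomes one 3D point. The function names and the angle convention (x forward, azimuth measured counterclockwise from x, elevation measured up from the horizontal plane) are assumptions for illustration; real sensors document their own conventions.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in vacuum, metres per second


def range_from_return_time(round_trip_s: float) -> float:
    """Range in metres. The pulse travels out and back, so the path is halved."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def to_cartesian(range_m: float, azimuth_deg: float, elevation_deg: float):
    """Convert one return (range, azimuth, elevation) to sensor-frame (x, y, z).

    Assumed convention: x forward, y left, z up.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the x-y plane
    return (
        horizontal * math.cos(az),
        horizontal * math.sin(az),
        range_m * math.sin(el),
    )


# A pulse that returns after one microsecond hit something roughly 150 m away.
distance = range_from_return_time(1e-6)
point = to_cartesian(distance, azimuth_deg=30.0, elevation_deg=-2.0)
```

Repeating this for every pulse in a sweep is what produces the point cloud that annotators later label.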

However, raw LiDAR data is of little use for supervised learning until it has been annotated precisely. Grouping millions, or even billions, of 3D points into labeled objects (vehicles, pedestrians, infrastructure, vegetation) is what enables machine learning algorithms to achieve robust 3D object detection, tracking, and scene segmentation.

🔹 Advanced Use Cases of LiDAR Annotation

1. Autonomous Driving & ADAS

– Labeling vehicles, pedestrians, cyclists, traffic lights, and road boundaries with 3D bounding boxes (cuboids) and per-point semantic segmentation classes.

– Multi-modal fusion: Combining LiDAR with camera (RGB) and RADAR data for enhanced 3D scene understanding.

– Critical for training autonomous vehicles and Advanced Driver Assistance Systems (ADAS) to handle real-world edge cases.
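As an illustration of what a cuboid label looks like in practice, the sketch below stores a box as a center, a size, and a heading angle, then checks which LiDAR points fall inside it. The `Cuboid` class and its field names are hypothetical; each annotation tool and dataset defines its own schema.

```python
import math
from dataclasses import dataclass


@dataclass
class Cuboid:
    """A hypothetical 3D box label: center, size, and yaw about the z axis."""
    label: str
    cx: float
    cy: float
    cz: float
    length: float  # extent along the box's own x axis
    width: float   # extent along the box's own y axis
    height: float  # extent along z
    yaw: float     # heading in radians, counterclockwise from world x

    def contains(self, x: float, y: float, z: float) -> bool:
        dx, dy, dz = x - self.cx, y - self.cy, z - self.cz
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        # Rotate the world-frame offset by -yaw to express it in the box frame.
        local_x = c * dx + s * dy
        local_y = -s * dx + c * dy
        return (
            abs(local_x) <= self.length / 2
            and abs(local_y) <= self.width / 2
            and abs(dz) <= self.height / 2
        )


def points_inside(box: Cuboid, points):
    """Return the subset of (x, y, z) points enclosed by the box."""
    return [p for p in points if box.contains(*p)]


# A car-sized box whose long axis points along world y (yaw = 90 degrees).
car = Cuboid("vehicle", 10.0, 0.0, 1.0, length=4.0, width=2.0, height=2.0,
             yaw=math.pi / 2)
cloud = [(10.0, 1.5, 1.0), (11.5, 0.0, 1.0), (10.0, 0.0, 3.0)]
hits = points_inside(car, cloud)
```

Counting the points inside a box is also a handy sanity check during review: a cuboid that encloses almost no points is usually misplaced.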

2. Smart Cities & Digital Twins

– Urban-scale LiDAR annotation for infrastructure modeling, mapping utilities, traffic flows, and structural safety.

– Supports digital twin simulations for predictive urban planning, traffic optimization, and disaster management.

3. Robotics, UAVs & Defense

– Training drones and ground robots to navigate unstructured environments using annotated 3D maps.

– Military & defense: Object recognition in cluttered terrain and low-visibility conditions (fog, smoke, night), including datasets that fuse LiDAR with SAR (synthetic aperture radar) and EO (electro-optical) imagery.

– Essential for SLAM (Simultaneous Localization and Mapping) in robotics research.

4. Forestry & Environmental Analytics

– Tree segmentation for biomass estimation, carbon tracking, and canopy height modeling.

– Terrain analysis and watershed modeling for environmental conservation and climate monitoring.
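Canopy height modeling shows why point-level labels matter here. A minimal sketch, assuming each point already carries a `"ground"` or `"vegetation"` label from annotation: for every grid cell, canopy height is the highest vegetation return minus the lowest ground return. The function name, label strings, and cell size are assumptions for illustration.

```python
def canopy_height_model(points, cell_size=1.0):
    """Compute a per-cell canopy height from labeled points.

    points: iterable of (x, y, z, label) with label "ground" or "vegetation".
    Returns {(col, row): height_in_same_units_as_z} for cells that contain
    both ground and vegetation returns.
    """
    ground_min = {}
    canopy_max = {}
    for x, y, z, label in points:
        cell = (int(x // cell_size), int(y // cell_size))
        if label == "ground":
            ground_min[cell] = min(z, ground_min.get(cell, z))
        elif label == "vegetation":
            canopy_max[cell] = max(z, canopy_max.get(cell, z))
    # Canopy height = top of vegetation minus terrain elevation in that cell.
    return {
        cell: canopy_max[cell] - ground_min[cell]
        for cell in canopy_max
        if cell in ground_min
    }


labeled = [
    (0.2, 0.3, 100.0, "ground"),
    (0.5, 0.5, 100.4, "ground"),
    (0.4, 0.6, 112.5, "vegetation"),
    (0.7, 0.1, 108.0, "vegetation"),
    (1.5, 0.5, 101.0, "ground"),  # bare cell: no vegetation, so no entry
]
chm = canopy_height_model(labeled)
```

If ground points are mislabeled as vegetation (or the reverse), the error goes straight into the height estimate, which is why segmentation quality drives biomass and carbon figures.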

5. Construction, Mining & Industrial Applications

– Site surveying: Accurate volume estimation of stockpiles, excavation tracking, and structural monitoring.

– Mining operations: Safety zone mapping and monitoring of hazardous zones.
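For stockpile surveying, one common way to turn labeled points into a volume is a simple grid (or "2.5D") method: split the footprint into square cells, take the surface height in each cell, and sum height times cell area. The sketch below assumes the points have already been labeled as stockpile surface and that the base is a flat plane at `base_z`; both are simplifications, and the function name is hypothetical.

```python
def stockpile_volume(points, base_z, cell_size=0.5):
    """Estimate volume above a flat base plane from surface points.

    points: iterable of (x, y, z) labeled as stockpile surface.
    Returns volume in cubic units of the input coordinates.
    """
    top = {}
    for x, y, z in points:
        cell = (int(x // cell_size), int(y // cell_size))
        # Keep the highest return per cell as the surface height.
        top[cell] = max(z, top.get(cell, z))
    cell_area = cell_size * cell_size
    # Cells at or below the base contribute nothing.
    return sum(max(z - base_z, 0.0) * cell_area for z in top.values())


surface = [
    (0.5, 0.5, 2.0),
    (1.5, 0.5, 2.0),
    (0.5, 1.5, 2.0),
    (1.5, 1.5, 2.0),
    (0.6, 0.6, 1.0),  # lower return in an already-covered cell, ignored
]
volume = stockpile_volume(surface, base_z=0.0, cell_size=1.0)  # 4 cells x 2.0 high
```

Smaller cells follow the surface more closely but leave gaps where the scan is sparse, so the cell size is usually matched to the point density.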

🔹 Manual vs. AI-Assisted LiDAR Annotation

| Aspect | Manual Annotation | AI-Assisted Annotation |
| --- | --- | --- |
| Accuracy | Requires 3D experts labeling point-by-point. Very precise, but time-intensive. | AI performs pre-labeling (auto-cuboids, segmentation, clustering); humans validate and refine. |
| Speed | Hours per single point cloud. | Cuts annotation time by 70–90% with pre-trained deep learning models. |
| Scalability | Not scalable for petabyte-scale LiDAR datasets. | AI enables massive-scale annotation pipelines for autonomous fleets and smart cities. |
| Cost | High due to complexity and expert involvement. | Cost-effective, blending automation with expert review. |
| Best Use Case | Research datasets, rare object categories. | Large-scale autonomous driving, robotics, defense, and city deployments. |
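To give a feel for the pre-labeling step, here is a deliberately simple sketch that uses grid-based clustering instead of a trained model: points whose occupied grid cells touch are grouped together, and each group gets an axis-aligned box as a proposal for a human to accept, adjust, or reject. It assumes ground points were removed beforehand. The function names, cell size, and output format are assumptions; production pipelines typically rely on learned detectors.

```python
from collections import defaultdict, deque


def cluster_points(points, cell_size=0.5, min_points=3):
    """Group (x, y, z) points whose occupied x-y grid cells are neighbours."""
    cells = defaultdict(list)
    for p in points:
        cells[(int(p[0] // cell_size), int(p[1] // cell_size))].append(p)

    seen, clusters = set(), []
    for start in cells:
        if start in seen:
            continue
        seen.add(start)
        queue, members = deque([start]), []
        while queue:  # breadth-first flood fill over touching cells
            cx, cy = queue.popleft()
            members.extend(cells[(cx, cy)])
            for nx in (cx - 1, cx, cx + 1):
                for ny in (cy - 1, cy, cy + 1):
                    if (nx, ny) in cells and (nx, ny) not in seen:
                        seen.add((nx, ny))
                        queue.append((nx, ny))
        if len(members) >= min_points:  # drop tiny groups as likely noise
            clusters.append(members)
    return clusters


def propose_cuboid(cluster):
    """Axis-aligned box around a cluster, as a draft label for human review."""
    xs, ys, zs = zip(*cluster)
    lo = (min(xs), min(ys), min(zs))
    hi = (max(xs), max(ys), max(zs))
    return {
        "center": tuple((a + b) / 2 for a, b in zip(lo, hi)),
        "size": tuple(b - a for a, b in zip(lo, hi)),
        "status": "needs_review",  # a human validates every proposal
    }


cloud = [
    (0.1, 0.1, 0.0), (0.3, 0.2, 1.0), (0.6, 0.1, 0.5), (0.2, 0.4, 1.5),
    (10.0, 10.0, 0.0), (10.2, 10.1, 0.8), (10.4, 10.3, 1.6),
    (50.0, 50.0, 0.0),  # isolated return, filtered out by min_points
]
proposals = [propose_cuboid(c) for c in cluster_points(cloud)]
```

The annotator's job then shifts from drawing every box from scratch to correcting drafts, which is where the time savings in the table come from.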

🔹 Challenges in LiDAR Annotation

– Class imbalance: Vehicles dominate urban datasets while rare objects (e.g., construction cones, wildlife) are underrepresented.

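– Occlusion & sparsity: In dense urban scenes, objects are often partially hidden behind one another, and point density falls off with distance, so far-away objects are represented by only a handful of returns.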

– Multi-sensor alignment: Fusing LiDAR, RADAR, and RGB data requires precise extrinsic calibration and time synchronization so that labels line up across sensors.

– Quality assurance: Human-in-the-loop verification is mandatory for safety-critical AI systems.
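The multi-sensor alignment challenge is easier to see with a worked projection. The sketch below maps a LiDAR point into a camera image using a rigid transform (rotation plus translation) followed by a pinhole camera model. The axis conventions (LiDAR: x forward, y left, z up; camera: x right, y down, z forward) and the intrinsic values are assumptions for illustration, not calibration data from any real rig.

```python
def project_to_image(point_lidar, rotation, translation, fx, fy, cx, cy):
    """Project a LiDAR-frame point to pixel coordinates.

    rotation: 3x3 nested list, translation: 3-vector, so p_cam = R * p + t.
    Returns (u, v), or None if the point lies behind the camera.
    """
    x, y, z = point_lidar
    xc, yc, zc = (
        row[0] * x + row[1] * y + row[2] * z + t
        for row, t in zip(rotation, translation)
    )
    if zc <= 0:
        return None  # behind the image plane, not visible
    # Pinhole model: divide by depth, scale by focal length, shift to the
    # principal point.
    return (fx * xc / zc + cx, fy * yc / zc + cy)


# Assumed axis swap from LiDAR (x fwd, y left, z up) to camera (x right, y down, z fwd).
R = [[0.0, -1.0, 0.0],
     [0.0, 0.0, -1.0],
     [1.0, 0.0, 0.0]]
t = [0.0, 0.0, 0.0]
intrinsics = dict(fx=1000.0, fy=1000.0, cx=640.0, cy=360.0)

straight_ahead = project_to_image((10.0, 0.0, 0.0), R, t, **intrinsics)
one_metre_right = project_to_image((10.0, -1.0, 0.0), R, t, **intrinsics)
behind = project_to_image((-5.0, 0.0, 0.0), R, t, **intrinsics)
```

With these assumed numbers, a point one metre to the side at ten metres range lands 100 pixels from the image center, so even a small error in the rotation or translation visibly shifts every projected label.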

Conclusion

LiDAR annotation is the backbone of 3D perception AI. By combining AI-powered automation with expert validation, organizations can build scalable, cost-efficient, and high-precision 3D training datasets. Whether for autonomous vehicles, robotics, or digital twins, LiDAR labeling unlocks the ability for machines to see, understand, and navigate the world in three dimensions.